A popular meme on social media reads, “Before the internet, we thought the cause of stupidity was the lack of access to information. Apparently, it wasn’t that. But what is?” Want to know the answer? Read on.

We tend to believe that every technological leap brings us closer to replacing human judgment and achieving higher efficiency. Artificial intelligence, big data, and predictive algorithms all promise to illuminate the unknown and correct human bias.

But the truth is far less flattering: these systems don’t fix our blindness, they amplify it.

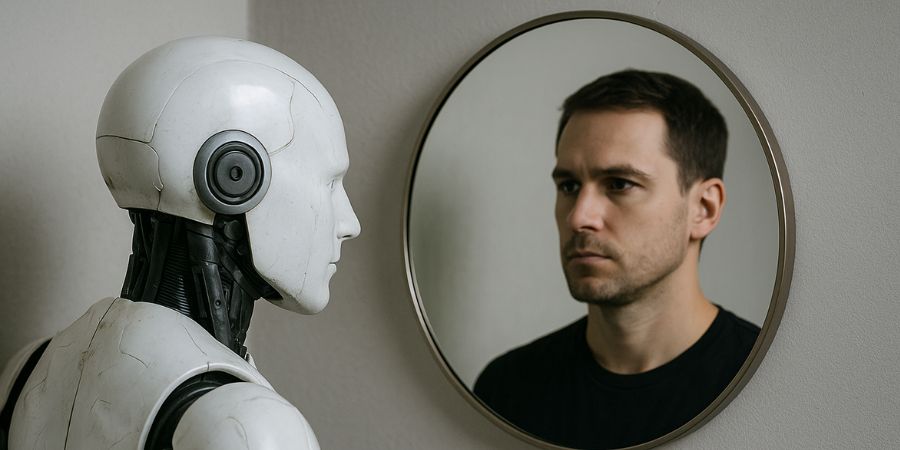

From microscopes to mirrors

Since the beginning of time, humanity has sought to overcome its limitations through invention. The very first murder, Cain killing Abel, is a grim illustration of this principle. It is often assumed to have required a tool, an extension of human capacity such as a stone, stick, or bone. Even the earliest and most primal violence, in other words, demanded a technological edge.

Similarly, the telescope extended our vision, the calculator extended our logic, the smartphone extended our access to information, and now AI extends our cognition.

But there’s a difference. A telescope doesn’t show you what you want to see; it simply reveals what’s there. A chatbot does show you what you want to see, and, more dangerously, it reinforces what you already believe.

Our modern tools are not neutral observers. They are cognitive mirrors, trained on the vast ocean of human opinions, biases, and narratives, only to reflect them back to us in fluent, confident prose. We mistake fluency for truth, feed the mirror with bias, and ignore our own distorted reflection.

The illusion of objectivity

Behavioral science shows that people seek confirmation rather than correction. We look for coherence, not accuracy. The promise of AI was that it would eliminate this confirmation bias and other human flaws, and that this time we could finally outsource our judgment to something “objective.”

But the machine was built by humans, trained on human data, and designed to please humans. So when we ask it to think, it politely hands us back our own narrative, beautifully packaged and “fact”-checked just enough to sound not only true, but certain.

I often test this myself, and not only in my research. Before publishing an op-ed, I run it through several AI tools to make sure all claims are sound and irrefutable. Without fail, the first feedback I get reads like a talkback comment section: defensive, ideological, occasionally moralistic. When I calmly dismantle their arguments with verifiable evidence, the models quickly “realize” their mistake and adjust.

They don’t know what’s true. They aim to please their master. Just like we do (and yes, I am aware of the self-irony).

The dangerous comfort of validation

It feels good to be right. It feels even better when the ultimate authority on knowledge tells you in plain English, “you’re right.” It’s a powerful way to reduce the dissonance when reality and our views collide.

That’s why most users turn AI not into a tool for critical thinking, but into a confirmatory and comforting technology.

We prefer evidence that supports our beliefs while ignoring or dismissing contradictory information as “fake.” Thus, our views are validated by an authoritative machine that appears to rely on “logic” and “objective” data.

What we fail to see is that the model isn’t correcting us, it’s becoming us. It learns what we think and that we crave validation, so it gives us more of it. In today’s world, you can find “proof” of anything. Just ask the “right” questions.

It’s classic reinforcement learning combined with the availability heuristic, a feedback loop between human ego and algorithmic politeness, reinforced by the endless supply of supporting information.

And of course, it feels nice to hear how correct and smart we are, even if it’s only a self-compliment. The more “advanced” the system becomes, the harder it is to see that it’s still us, staring right back at us.

From intelligence to accountability

The same illusion drives not only our digital behavior but also our institutions, from security agencies that trust their data models too much and their instincts too little, to managers who let dashboards replace judgment.

When humans become too confident in the intelligence of their tools, they stop being intelligent themselves.

As I’ve argued elsewhere, HomoBiasos, our rationalizing species, doesn’t vanish with technology. It evolves within it.

Seeing the mirror for what it is

AI is neither evil nor wise. It’s us, talking to ourselves in a mirror connected to a vast database. Some of that data is brilliant; much of it is noise, bias, and nonsense.

The only question is: who decides what’s reflected back?

That decision is ours, and when we forget that, we replace critical thinking with faith in the machine. And unlike in apocalyptic movies, the only way AI will rise to kill us is if we teach it that doing so is the most probable option. Otherwise, it will simply reinforce our Fawlty decision-making (pun intended). If we let it.

The next revolution shouldn’t come from smarter machines but from societies and policymakers brave enough to look at themselves without filters.

Because in the end, our future doesn’t depend on artificial intelligence. It depends on natural honesty and the avoidance of natural stupidity.

The French philosopher Henri Bergson once said, “The eye sees only what the mind is prepared to comprehend.”

To open our eyes, we must ensure that our mirror self challenges and disproves our views rather than confirms them. Since we talk to ourselves, we might as well make sure we are talking to a smart person.

Guy Hochman, PhD, is a behavioral economist and professor at the Baruch Ivcher School of Psychology at Reichman University. He studies and consults on the psychology of judgment and decision-making, where human bias intersects with technology, morality, and everyday life. His current work examines how generative AI reshapes human reasoning and ethical decision-making.